I’m involved in a debate over diet and health over at Dean’s, and in the course of that debate I was encouraged to read a paper by Corr et al. that suggests low-fat diets are essentially useless for reducing heart disease. This post started out as a comment, but it grew enough to warrant a post in its own right. So, let’s look at what the Corr paper is actually saying, shall we?

The international bodies which developed the current recommendations based them on the best available evidence[1-3]. Numerous epidemiological surveys confirmed beyond doubt the seminal observation of Keys in the Seven Countries Study of a positive correlation between intake of dietary fat and the prevalence of coronary heart disease[4] although recently a cohort study of more than 43,000 men followed for 6 years has shown that this is not independent of fiber intake[5] or risk factors. The prevalence of coronary heart disease has been shown to be correlated with the level of serum total and low density lipoprotein cholesterol (LDL) as well as inversely with high density lipoprotein.

So, high dietary fat intake is indeed positively correlated with coronary heart disease. Corr concedes this at the very start!

Further, coronary heart disease is also indeed associated with high LDL and low HDL. So far I am not seeing any Cholesterol Conspiracy here… the ADA seems to be right on the ball.

So, we’ve already established that CHD is associated with high fat, high LDL, and low HDL. So, what’s left to argue about?

As a consequence of these studies, it was assumed that the reverse would hold true: reduction in dietary total and especially saturated fat would lead to a fall in serum cholesterol and a reduction in the incidence of coronary heart disease. The evidence from clinical trials does not support this hypothesis.

Hmm. Two sentences here. The first is about a reasonable inference from the conceded association of fat and LDL with CHD. But OK, let’s call the question of whether the reverse is true Question A: “does reducing dietary fat and LDL reduce CHD?”

And then another sentence, about evidence from clinical trials not supporting that inference. What about those clinical trials, exactly?

It can be argued that it is virtually impossible to design and conduct an adequate dietary trial. The alteration of any one component of a diet will lead to alterations in others and often to further changes in lifestyle so it is extremely difficult to determine which, if any, of these produce an effect. Dietary trials cannot generally be blinded and changes in the diet of the ‘control’ population are frequently seen: they may be so marked as to render the study irrevocably flawed. It is also recognized that adherence to dietary advice over many years by large population samples, as for most people in real life, is poor and that the stricter the diet, the worse the compliance.

Ah. So the available evidence from clinical trials is fundamentally susceptible to systematic error. Fair enough. So any conclusions we draw from them should be tempered with that, right?

(A long analysis of the clinical trials in the literature follows.)

The message from these trials is that dietary advice to reduce saturated fat and cholesterol intake, even combined with intervention to reduce other risk factors, appears to be relatively ineffective for the primary prevention of coronary heart disease and has not been shown to reduce mortality.

OK, so the trials focusing on low-fat diets alone didn’t show any primary prevention benefit. Well, see caveat above, right? (and Corr’s noted exception about the MRFIT study…)

However, what about secondary prevention?

Well, good! But still, is there some reason that maybe we aren’t seeing better results here? Is diet necessary, or sufficient? Let’s look at studies that not only cut fat but also raise HDL:

The first successful dietary study to show reduction in overall mortality in patients with coronary heart disease was the DART study reported in 1989[20]. The three-way design of this ‘open’ trial compared a low saturated fat diet plus increased polyunsaturated fats, similar to the trials above, with a diet including at least two portions of fatty fish or fish oil supplements per week, and a high cereal fibre diet. No benefit in death or reinfarctions was seen in the low fat or the high fibre groups. In the group given fish advice there was a significant reduction in coronary heart disease deaths and overall mortality was reduced by about 29% after 2 years, although there was a non-significant increase in myocardial infarction rates. The reduction in saturated fats in the fish advice group was less than in the low fat diet group and there was no significant change in their serum cholesterol.

Finally, the more recent Lyon trial[21] used a Mediterranean-type of diet with a modest reduction in total and saturated fat, a decrease in polyunsaturated fat and an increase in omega-3 fatty acids from vegetables and fish. As in the DART study there was little change in cholesterol or body weight, but the trial was stopped early following a 70% reduction in myocardial infarction, coronary mortality and total mortality after 2 years.

In other words, raising HDL through diet helps a lot, whereas reducing saturated fat (or just increasing fiber) still doesn’t seem to do anything. We’ve established that a modest increase in HDL can help. But have we established that a modest reduction in LDL will not help?

Unfortunately, the design and conduct of these trials are insufficient to permit conclusions about which polyunsaturates and other elements of these diets are the most beneficial. The long term effects of these trials[20,21] and the compliance with the dietary regimes remain to be seen.

So, we don’t really know whether these studies answer that question. It’s possible that lowering LDL has a longer-timescale benefit than increasing HDL. Because of the limitations Corr concedes, these studies don’t answer the question either way, and they certainly haven’t yet proven that lowering LDL is not genuinely helpful.

Anyway, how much was LDL really reduced?

An important aspect of the lipid-lowering dietary trials is that on average they were only able to achieve about a 10% reduction in total cholesterol. The results of recent drug trials have demonstrated that there is a linear relation between the extent of the cholesterol, or LDL, reduction and the decrease in coronary heart disease mortality and morbidity, and a significant effect seen only when these lipids are lowered by more than 25%[23].

Ahhhh. Corr goes on to cite a bunch of studies showing frankly awesome improvements in mortality from using drugs to lower LDL by 25% or more.

(in other words, definitively proving that lower LDL does indeed reduce heart disease. We just answered Question A from above).

So, let’s summarize:

conceded by Corr at the outset:

– increased HDL reduces CHD.

– increased fat increases CHD.

– increased LDL increases CHD.

dietary trials:

– modestly lowered cholesterol (about a 10% reduction on average) does not reduce CHD.

drug trials:

– substantially reduced LDL (by more than 25%) does reduce CHD.

caveats:

– dietary trials have systematic errors.

– long-term trials on reducing LDL have not been performed.

Special note: the MRFIT trial follow-up, which focused on reducing LDL through diet alone, did show fewer myocardial infarctions over a longer term.

My conclusion from this would be to (a) increase HDL now for immediate benefit, and (b) reduce fat and LDL in my diet for long-term benefit. Seems obvious enough, and fully in accord with what the ADA recommends.

Corr’s conclusion?

diets focused exclusively on reduction of saturated fats and cholesterol are relatively ineffective for secondary prevention and should be abandoned.

Umm... what?!?!

This is where they cross over into vaccines-autism and fluoridated water territory, frankly.

What would have made the Corr paper immeasurably stronger would have been for them to devise an experiment that would answer these questions and fill the gaps. That’s always my challenge to these self-styled “skeptics” of the scientific consensus. What’s the experiment you propose? What would you do to make your case?

That’s how science works. Theory drives experiment, experiment refines theory, and back again. If your claim is that the available evidence (in this case, clinical trials) doesn’t support the contention, that’s not enough. You need to come up with an experiment that actually refutes the contention. Formulate your hypothesis and test it! Anything else is just nitpicking from the sidelines, which is how most of these agenda-driven meta-analyses end up reading.

Frankly, I am very eager to be able to dispense with the low-fat, low-cholesterol crap. Here’s why, in a nutshell.

So please, Dr Corr and any other “cholesterol skeptics” out there: show me the proposal for your experiment, and I guarantee you the fast food industry will show you the money.

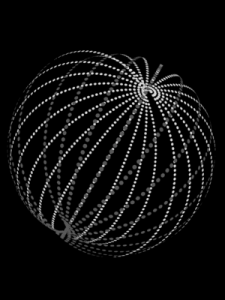

