With all the talk of the cloud, it’s worth noting that for every user, the single most important droplet therein will always be their own PC. Cloudware is still nowhere near as feature-rich as software running on your own machine. And of course all the important user data still resides on the home node. That is unlikely to shift online significantly in a world where external USB hard drives are rapidly approaching both terabyte capacities and the $100 price point. The home node also has the edge over the cloud in raw speed and performance in any mainstream computing scenario. Thus the killer apps of the future will be cloudware that leverages the power of the home node, not apps that run purely in the cloud.

To get there, however, we need to tap the power of the home node via the browser, which remains the nexus where cloud computing and flex computing intersect. Here’s how we get there:

JavaScript creator and Mozilla CTO Brendan Eich has revealed a new project called IronMonkey that will eventually make it possible for web developers to use IronPython and IronRuby alongside JavaScript for interactive web scripting.

The IronMonkey project aims to add multilanguage functionality to Tamarin, a high-performance ECMAScript 4 virtual machine which is being developed in collaboration with Adobe and is intended for inclusion in future versions of Firefox. The IronMonkey project will leverage the source code of Microsoft’s open source .NET implementations of Python and Ruby, but will not require a .NET runtime. The goal is to map IronPython and IronRuby directly to Tamarin using bytecode translation.

A plugin for IE will also be developed. The upshot is that Python and Ruby programming will become available to web applications running in the browser, on the client side. Look at how much amazing functionality we already enjoy in our web browsers thanks to Web 2.0 technology, which is AJAX-driven (i.e., JavaScript). Could anyone back in 1996 have imagined Google Maps? Hard-core programming geeks who understand this stuff better than I do should check out Jim Hugunin’s blog at Microsoft to see what they have in mind; it’s heady stuff. But fundamentally, what we are looking at is a future where apps are served to you just as data is, and your web browser becomes the operating system in which they run. I can’t even speculate about what this liberation from the deskbound OS model will mean, but it’s not a minor change.

Still, all of this is going to run on the home node, not in the cloud. That’s the key. There’s only so much you can do, and will ever be able to do, on vaporware 🙂

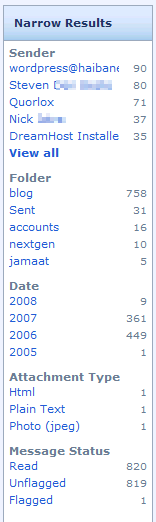

I’m starting a new category called “cloudware,” which is how I intend to refer to software that runs in the cloud. This will be my way of documenting the cloudware I actually use and find useful.